Finite of Sense and Infinite of Thought: A History of Computation, Logic and Algebra, Part III

An almost metaphysical system of abstract signs, by which the motion of the hand performs the office of the mind.

Even when the connotation of a term has been accurately fixed, and still more if it has been left in the state of a vague unanalyzed feeling of resemblance; there is a constant tendency in the word, through familiar use, to part with a portion of its connotation. It is a well-known law of the mind, that a word originally associated with a very complex cluster of ideas, is far from calling up all those ideas in the mind, every time the word is used… If this were not the case, processes of thought could not take place with any thing like the rapidity which we know they possess.

… The inferences… which are successively drawn, are inferences concerning things, not symbols; although as any Things whatever will serve the turn, there is no necessity for keeping the idea of the Thing at all distinct, and consequently the process of thought may, in this case, be allowed without danger to do what all processes of thought, when they have been performed often, will do if permitted, namely, to become entirely mechanical.

… Whenever the nature of the subject permits our reasoning processes to be, without danger, carried on mechanically, the language should be constructed on as mechanical principles as possible; while, in the contrary case, it should be so constructed that there shall be the greatest possible obstacles to a merely mechanical use of it.

III. Ghostbusters

Lingua Characteristica

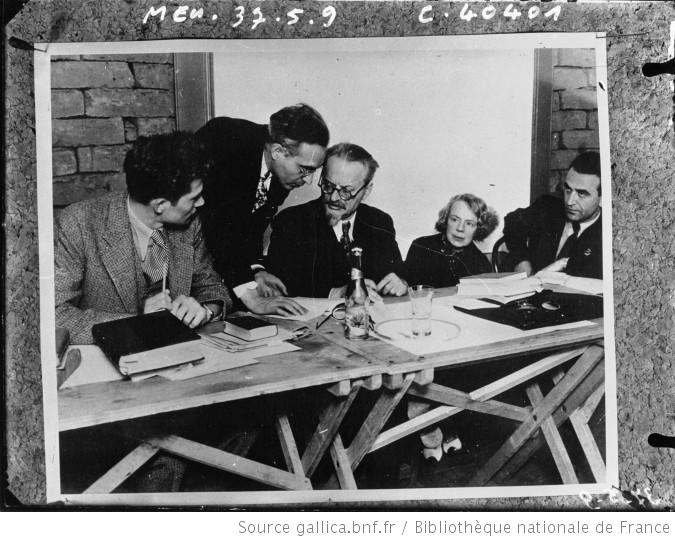

In his seminal book, From Frege to Gödel: A Source Book in Mathematical Logic, 1879-1931, the pioneering historian of logic (and former personal secretary of Leon Trotsky in exile) Jean van Heijenoort writes:

From Frege to Gödel, p. vi Boole, De Morgan, and Jevons are regarded as the initiators of modern logic, and rightly so. This first phase, however, suffered from a number of limitations. It tried to copy mathematics too closely, and often artificially. The multiplicity of interpretations of what became known as Boolean algebra created confusion and for a time was a hindrance rather than an advantage. Considered by itself, the period would, no doubt, leave its mark upon the history of logic, but it would not count as a great epoch. A great epoch in the history of logic did open in 1879, when Gottlob Frege’s Begriffsschrift was published. This book freed logic from an artificial connection with mathematics but at the same time prepared a deeper interrelation between these two sciences.

(Some logicians agree with Heijenoort’s assessment, like Quine, who wrote, “[L]ogic became a substantial branch of mathematics only with the emergence of general quantification theory at the hands of Frege and Peirce. I date modern logic from there,” but others paint a more nuanced picture. See Peckhaus’s Calculus Ratiocinator vs. Characteristica Universalis? The Two Traditions in Logic, Revisited.)

But Frege’s ideas were ignored and even outright rejected when they were introduced, and it took someone else to spread them. In 1889, Giuseppe Peano (1858–1932), an Italian mathematician, wrote a pamphlet called Arithmetices principia, nova methodo exposita (The principles of arithmetic, presented by a new method).

Peano begins:

The principles of arithmetic, presented by a new method, in From Frege to Gödel, p. 85 Questions that pertain to the foundations of mathematics, although treated by many in recent times, still lack a satisfactory solution. The difficulty has its main source in the ambiguity of language.

That is why it is of the utmost importance to examine attentively the very words we use. My goal has been to undertake this examination, and in this paper I am presenting the results of my study, as well as some applications to arithmetic.

I have denoted by signs all ideas that occur in the principles of arithmetic, so that every proposition is stated only by means of these signs.

With these notations, every proposition assumes the form and the precision that equations have in algebra; from the propositions thus written other propositions are deduced, and in fact by procedures that are similar to those used in solving equations. This is the main point of the whole paper.

Heijenoort writes that

From Frege to Gödel, p. 84 The ease with which we read Peano’s booklet today shows how much of his notation has found its way, either directly or in a somewhat modified form, into contemporary logic.

Jean van Heijenoort (left) with Leon Trotsky (middle) and Trotsky’s wife, Natalia Sedova, in Mexico, 1937 (Source: Wikipedia)

… There is, however, a grave defect. The formulas are simply listed, not derived; and they could not be derived, because no rules of inference are given. Peano introduces a notation for substitution but does not state any rule. What is far more important, he does not have any rule that would play the role of the rule of detachment. The result is that, for all his meticulousness in the writing of formulas, he has no logic that he can use… What is presented as a proof is actually a list of formulas that are such that, from the point of view of the working mathematician, each one is very close to the next. But, however close two successive formulas may be, the logician cannot pass from one to the next because of the absence of rules of inference. The proof does not get off the ground.

… [T]he proof … cannot be carried out by a formal procedure; it requires some intuitive logical argument, which the reader has to supply. [This] brings out the whole difference between an axiomatization, even written in symbols and however careful it may be, and a formalization.

… Peano’s writings, of minor significance for logic proper, showed how mathematical theories can be expressed in one symbolic language. These writings rapidly gained a wide influence and greatly contributed to the diffusion of the new ideas.
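Heijenoort’s distinction between an axiomatization and a formalization can be made concrete with a small sketch. The code below is our own illustration, not Peano’s or Frege’s system: formulas are nested tuples, with the hypothetical encoding `("->", a, b)` standing for “if a then b”, and the hypothetical function `check_proof` accepts a list of formulas only if every line is a premise or follows from two earlier lines by the rule of detachment (modus ponens). A list of formulas that merely looks plausible to a working mathematician is rejected as soon as one step cannot be justified by the rule.

```python
from typing import Union

# A formula is an atom (a string) or an implication ("->", antecedent, consequent).
# This tuple encoding is a hypothetical choice made for illustration only.
Formula = Union[str, tuple]


def imp(a: Formula, b: Formula) -> tuple:
    """Build the implication 'if a then b'."""
    return ("->", a, b)


def check_proof(premises: list, steps: list) -> bool:
    """Accept the steps only if each one is a premise or follows by detachment
    (modus ponens) from two earlier steps: from A and A -> B, infer B."""
    derived = []
    for step in steps:
        by_premise = step in premises
        by_detachment = any(imp(a, step) in derived for a in derived)
        if not (by_premise or by_detachment):
            return False  # a gap that the reader would have to fill by intuition
        derived.append(step)
    return True


premises = ["p", imp("p", "q"), imp("q", "r")]
print(check_proof(premises, ["p", imp("p", "q"), "q", imp("q", "r"), "r"]))  # True
print(check_proof(premises, ["p", "r"]))  # False: "r" does not follow in one step
```

The second list is “close” in the working mathematician’s sense (r does follow from the premises), but without the intermediate steps the rule cannot be applied, which is exactly the kind of gap Heijenoort describes.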

In 1892, Peano announced an ambitious project, which he called Formulario Mathematico, to publish all known mathematical theorems in his symbolic language. He published five volumes, and even bought a small printing office and learned the craft in order to serve his goal. Murawski writes (Mechanization of Reasoning, Kindle Locations 2867-2872): “One of the results of the project was a further simplification of the mathematical symbolism. Peano treated Formulario as a fulfilment of Leibniz’s ideas. He wrote: ‘After two centuries, this dream of the inventor of the infinitesimal calculus has become a reality… We now have the solution to the problem proposed by Leibniz’. He hoped that Formulario would be widely used by professors and students. He himself immediately began to use it in his classes. But the response was very weak. One reason was the fact that almost everything was written there in symbols, the other was the usage of latino sine flexione (that is Latin without inflection — an artificial international language invented by Peano…) for explanations and commentaries.”

“As for Frege,” Heijenoort writes (From Frege to Gödel, p. 83), “Peano learned of his work immediately after the publication of Arithmetices principia.”

Mechanization of Reasoning, Kindle Locations 2881-2894 The influence of Peano and his writings on the development of mathematical logic was great. In the last decade of the 19th century he and his school played first fiddle. Later the center was moved to England where B[ertrand] Russell was active — but Peano has played also here an important role. Russell met Peano at the International Philosophical Congress in Paris in August 1900 and later he wrote about this meeting in the following way:

A portrait of Bertrand Russell by Renee Bolinger (source: the artist’s website; used with permission)

The Congress was a turning point in my intellectual life, because I met there Peano. I already knew him by name and had seen some of his work, but had not taken the trouble to master his notation. In discussions at the Congress I observed that he was always more precise than anyone else, and that he invariably got the better of any argument upon which he embarked. As the days went by, I decided that this must be owing to his mathematical logic. I therefore got him to give me all his works, and as soon as the Congress was over I retired to Fernhurst to study quietly every word written by him and his disciples. It became clear to me that his notation afforded an instrument of logical analysis such as I had been seeking for years, and that by studying him I was acquiring a new and powerful technique for the work I had long wanted to do.

In a letter to Jourdain from 1912 B. Russell wrote: “Until I got hold of Peano, it had never struck me that Symbolic Logic would be any use for the Principles of mathematics, because I knew the Boolean stuff and found it useless. It was Peano’s, together with the discovery that relations could be fitted into his system, that led me to adopt symbolic logic.” It should be admitted also that Russell learned of Frege and his works just through Peano.

Just prior to meeting Peano in Paris, Russell published A Critical Exposition of the Philosophy of Leibniz (1900), the first modern study of Leibniz’s work in logic. It was through Bertrand Russell (1872–1970) and his famous work with Alfred North Whitehead (1861–1947), the Principia Mathematica, published in 1910–1913, which continued Frege’s ideas, that the world learned of and accepted Frege’s work.

As his first foray into logic, in 1879, Gottlob Frege (1848–1925) published Begriffsschrift, eine der arithmetischen nachgebildete Formelsprache des reinen Denkens (Ideography: A Formal Language for Pure Thought Modeled on that of Arithmetic), of which Heijenoort writes (From Frege to Gödel, p. 1): “This is the first work that Frege wrote in the field of logic, and … it is perhaps the most important single work ever written in logic. Its fundamental contributions, among lesser points, are the truth-functional propositional calculus, the analysis of the proposition into function and argument(s) instead of subject and predicate, [and] the theory of quantification.”

The Begriffsschrift — literally, “concept-writing” but usually translated to English as “ideography” — was Frege’s attempt to address the ambiguities of natural language.

Begriffsschrift, a formula language modeled upon that of arithmetic, for pure thought, 1879, in From Frege to Gödel, pp. 5-7 In apprehending a scientific truth we pass, as a rule, through various degrees of certitude. Perhaps first conjectured on the basis of an insufficient number of particular cases, a general proposition comes to be more and more securely established by being connected with other truths through chains of inferences, whether consequences are derived from it that are confirmed in some other way or whether, conversely, it is seen to be a consequence of propositions already established. Hence we can inquire, on the one hand, how we have gradually arrived at a given proposition and, on the other, how we can finally provide it with the most secure foundation…

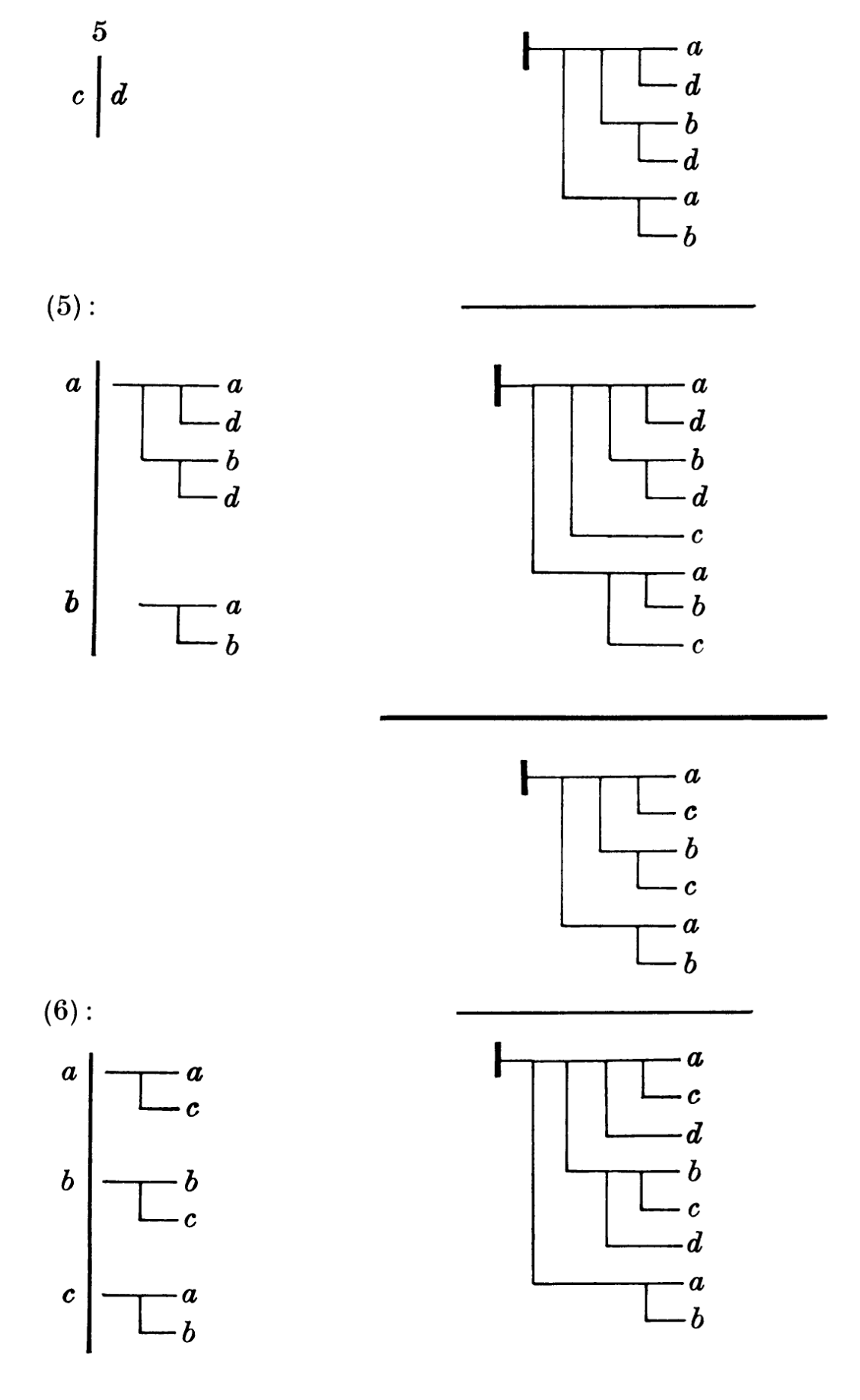

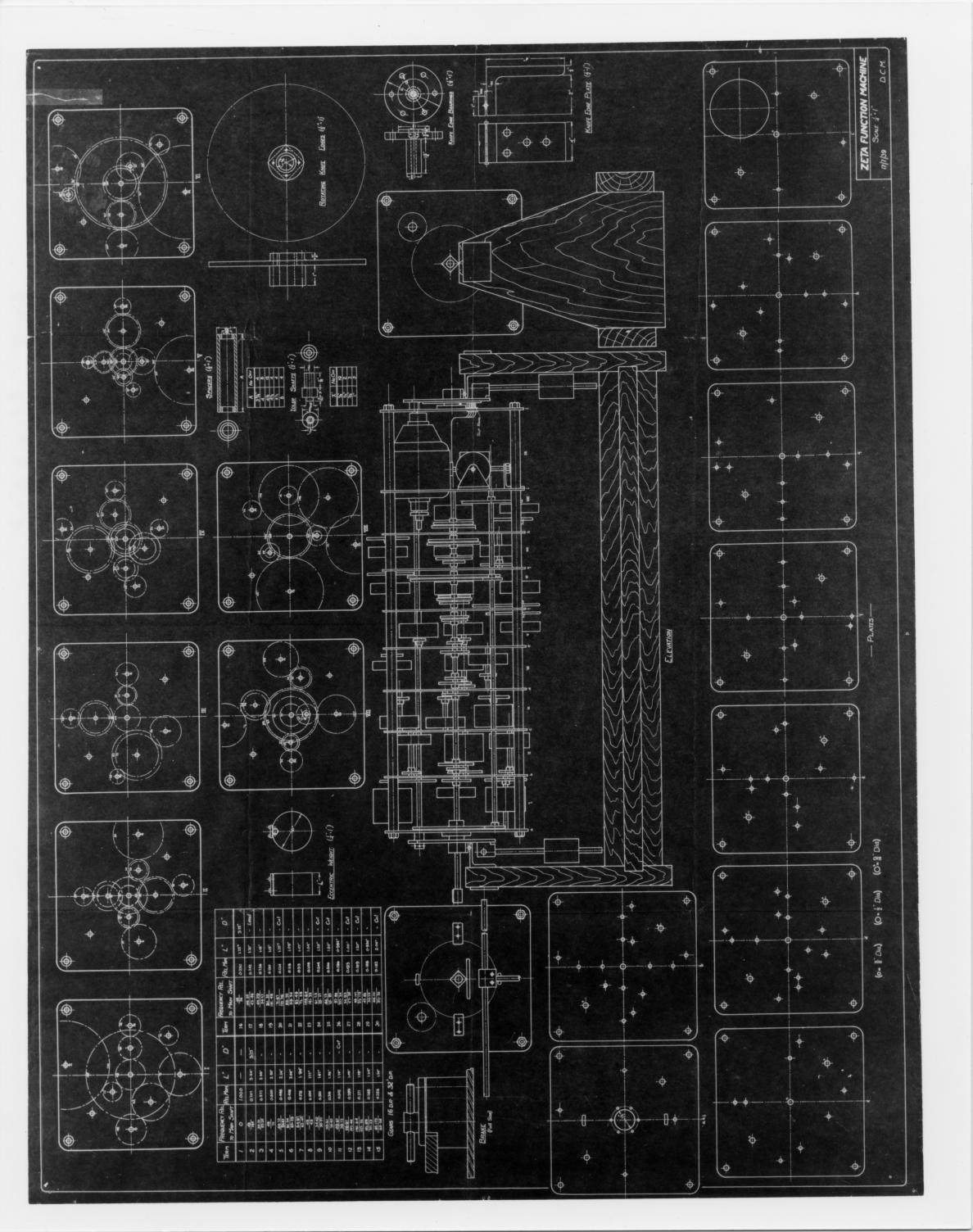

Inference in Frege’s Begriffsschrift, from Begriffsschrift, a formula language modeled upon that of arithmetic, for pure thought. While the abstract syntax of Frege’s Begriffsschrift was that of the familiar predicate calculus, its concrete syntax was two-dimensional: it is, in fact, a syntax tree (and thus requires neither parentheses nor operator precedence), resembling a circuit diagram. A proposition was introduced with a horizontal line, as in $- a$, while the judgment that $a$ is true was marked by the addition of a vertical bar, as in $\vdash a$. Vertical branches represented the conditional (material implication), small vertical notches on the lines represented negation, and universal quantification was represented by an indentation with the name of the quantified variable, as in . Conjunction and existential quantification were not primitive, but represented by the proper application of negation. Unlike the logic itself, the notation was never accepted, but some have argued that it demonstrates ingenious design.

The most reliable way of carrying out a proof, obviously, is to follow pure logic, a way that, disregarding the particular characteristics of objects, depends solely on those laws upon which all knowledge rests. Accordingly, we divide all truths that require justification into two kinds, those for which the proof can be carried out purely by means of logic and those for which it must be supported by facts of experience. But that a proposition is of the first kind is surely compatible with the fact that it could nevertheless not have come to consciousness in a human mind without any activity of the senses. Hence it is not the psychological genesis but the best method of proof that is at the basis of the classification.
Now, when I came to consider the question to which of these two kinds the judgments of arithmetic belong, I first had to ascertain how far one could proceed in arithmetic by means of inferences alone, with the sole support of those laws of thought that transcend all particulars… I found the inadequacy of language to be an obstacle; no matter how unwieldy the expressions I was ready to accept, I was less and less able, as the relations became more and more complex, to attain the precision that my purpose required. This deficiency led me to the idea of the present ideography. Its first purpose, therefore, is to provide us with the most reliable test of the validity of a chain of inferences and to point out every presupposition that tries to sneak in unnoticed, so that its origin can be investigated. That is why I decided to forgo expressing anything that is without significance for the inferential sequence. … I called what alone mattered to me the conceptual content. Hence this definition must always be kept in mind if one wishes to gain a proper understanding of what my formula language is. That, too, is what led me to the name “Begriffsschrift” [ideography or concept language]. Since I confined myself for the time being to expressing relations that are independent of the particular characteristics of objects, I was also able to use the expression “formula language for pure thought”. That it is modeled upon the formula language of arithmetic, as I indicated in the title, has to do with fundamental ideas rather than with details of execution. Any effort to create an artificial similarity by regarding a concept as the sum of its marks [Merkmale] was entirely alien to my thought. The most immediate point of contact between my formula language and that of arithmetic is the way in which letters are employed.

… I believe that I can best make the relation of my ideography to ordinary language clear if I compare it to that which the microscope has to the eye. Because of the range of its possible uses and the versatility with which it can adapt to the most diverse circumstances, the eye is far superior to the microscope. Considered as an optical instrument, to be sure, it exhibits many imperfections, which ordinarily remain unnoticed only on account of its intimate connection with our mental life. But, as soon as scientific goals demand great sharpness of resolution, the eye proves to be insufficient. The microscope, on the other hand, is perfectly suited to precisely such goals, but that is just why it is useless for all others.

This ideography, likewise, is a device invented for certain scientific purposes, and one must not condemn it because it is not suited to others. If it answers to these purposes in some degree, one should not mind the fact that there are no new truths in my work. I would console myself on this point with the realization that a development of method, too, furthers science. Bacon, after all, thought it better to invent a means by which everything could easily be discovered than to discover particular truths, and all great steps of scientific progress in recent times have had their origin in an improvement of method.

Leibniz, too, recognized—and perhaps overrated—the advantages of an adequate system of notation. His idea of a universal characteristic, of a calculus philosophicus or ratiocinator, was so gigantic that the attempt to realize it could not go beyond the bare preliminaries. The enthusiasm that seized its originator when he contemplated the immense increase in the intellectual power of mankind that a system of notation directly appropriate to objects themselves would bring about led him to underestimate the difficulties that stand in the way of such an enterprise. But, even if this worthy goal cannot be reached in one leap, we need not despair of a slow, step-by-step approximation. When a problem appears to be unsolvable in its full generality, one should temporarily restrict it; perhaps it can then be conquered by a gradual advance. It is possible to view the signs of arithmetic, geometry, and chemistry as realizations, for specific fields, of Leibniz’s idea. The ideography proposed here adds a new one to these fields, indeed the central one, which borders on all the others. If we take our departure from there, we can with the greatest expectation of success proceed to fill the gaps in the existing formula languages, connect their hitherto separated fields into a single domain, and extend this domain to include fields that up to now have lacked such a language.

… If it is one of the tasks of philosophy to break the domination of the word over the human spirit by laying bare the misconceptions that through the use of language often almost unavoidably arise concerning the relations between concepts and by freeing thought from that with which only the means of expression of ordinary language, constituted as they are, saddle it, then my ideography, further developed for these purposes, can become a useful tool for the philosopher.

… The mere invention of this ideography has, it seems to me, advanced logic. I hope that logicians, if they do not allow themselves to be frightened off by an initial impression of strangeness, will not withhold their assent from the innovations that, by a necessity inherent in the subject matter itself, I was driven to make. These deviations from what is traditional find their justification in the fact that logic has hitherto always followed ordinary language and grammar too closely. In particular, I believe that the replacement of the concepts subject and predicate by argument and function, respectively, will stand the test of time. It is easy to see how regarding a content as a function of an argument leads to the formation of concepts. Furthermore, the demonstration of the connection between the meanings of the words if, and, not, or, there is, some, all, and so forth, deserves attention.

The Begriffsschrift contains all the familiar characteristics of formal logic as we know it today: connectives, variables, relations, quantifiers, and, most importantly, syntactic inference rules by which deduction is performed.
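To see how a tree-shaped notation can get by with very few primitives, here is a minimal sketch (our own illustration; the class and function names are hypothetical) of propositional formulas built only from the conditional and negation, the two propositional connectives Frege took as primitive. Conjunction and disjunction are defined from them and checked against Python’s `and` and `or` by truth table. Quantifiers are left out, and because formulas are trees, no parentheses or precedence rules are needed.

```python
from dataclasses import dataclass
from itertools import product
from typing import Union


@dataclass(frozen=True)
class Var:
    name: str


@dataclass(frozen=True)
class Not:
    arg: "Formula"


@dataclass(frozen=True)
class Cond:
    # The conditional: "if antecedent then consequent"
    antecedent: "Formula"
    consequent: "Formula"


Formula = Union[Var, Not, Cond]


def evaluate(f: Formula, env: dict) -> bool:
    """Evaluate a formula tree under an assignment of truth values to variables."""
    if isinstance(f, Var):
        return env[f.name]
    if isinstance(f, Not):
        return not evaluate(f.arg, env)
    # Material conditional: false only when the antecedent is true and the consequent false
    return (not evaluate(f.antecedent, env)) or evaluate(f.consequent, env)


def conj(a: Formula, b: Formula) -> Formula:
    """'a and b' defined as 'not (if a then not b)'."""
    return Not(Cond(a, Not(b)))


def disj(a: Formula, b: Formula) -> Formula:
    """'a or b' defined as 'if not a then b'."""
    return Cond(Not(a), b)


p, q = Var("p"), Var("q")
for vp, vq in product([True, False], repeat=2):
    env = {"p": vp, "q": vq}
    assert evaluate(conj(p, q), env) == (vp and vq)
    assert evaluate(disj(p, q), env) == (vp or vq)
print("derived connectives agree with 'and'/'or' on all assignments")
```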

Frege states that his goal in creating a language for “pure thought” (i.e., thought that does not interact with the senses) is finding the best proof method, and not providing a psychological description of the mind’s operation. He expanded on what is meant by “laws of thought” in a later work:

The Basic Laws of Arithmetic, xv, p. 12-13, 1893 And this brings me to what stands in the way of the influence of my book among logicians: namely, the corrupting incursion of psychology into logic. Our conception of the laws of logic is necessarily decisive for our treatment of the science of logic, and that conception in turn is connected with our understanding of the word “true”. It will be granted by all at the outset that the laws of logic ought to be guiding principles for thought in the attainment of truth, yet this is only too easily forgotten, and here what is fatal is the double meaning of the word “law”. In one sense a law asserts what is; in the other it prescribes what ought to be. Only in the latter sense can the laws of logic be called ‘laws of thought’: so far as they stipulate the way in which one ought to think. Any law asserting what is, can be conceived as prescribing that one ought to think in conformity with it, and is thus in that sense a law of thought. This holds for laws of geometry and physics no less than for laws of logic. The latter have a special title to the name “laws of thought” only if we mean to assert that they are the most general laws, which prescribe universally the way in which one ought to think if one is to think at all. But the expression “law of thought” seduces us into supposing that these laws govern thinking in the same way as laws of nature govern events in the external world. In that case they can be nothing but laws of psychology: for thinking is a mental process. And if logic were concerned with these psychological laws it would be a part of psychology;… is reduced to individuals’ taking something to be true. All I have to say to this is: being true is different from being taken to be true, whether by one or many or everybody, and in no case is to be reduced to it.

Frege’s formal notation marked a sharp departure from the algebraic school begun with George Boole. Instead of equations and reasoning by substitution and elimination, formal reasoning proceeds by syntactic inference rules more elaborate than those offered by algebra. And while it would prove to be the innovation that would ultimately make formal logic a serious object of study with the publication of the Principia Mathematica, it did not go over well with members of that tradition like Ernst Schröder (1841-1902):

Review of Frege’s Conceptual Notation This very unusual book—obviously the original work of an ambitious thinker with a purely scientific turn of mind—pursues a course to which the reviewer is naturally highly sympathetic, since he himself has made similar investigations. The present work promises to advance toward Leibniz’s ideal of a universal language, which is still very far from its realization despite the great importance laid upon it by that brilliant philosopher!

The fact that a completed universal language, characteristic, or general conceptual notation {allgemeine Begriffschrift} does not exist even today justifies my trying to say from the beginning what is to be understood by it. I almost want to say, “it is a risk to state [what a completed universal language would be like]”; for, as history teaches, in the further pursuit of such ideals, we often find ourselves led to modify the original ones very significantly; especially once we have succeeded in advancing substantially toward [our goal].

… Even if, in spite of all earlier attempts and also the latest one now under discussion, the idea of a universal language has not yet been realized in a nearly satisfactory sense; it is still the case that the impossibility of the undertaking has not come to light. On the contrary, there is always hope, though remote, that by making existing scientific technical language {wissenschaftliche Kunstsprache} precise, or by developing a special such language, we may gain a firm foundation by means of which it would someday become possible to emerge from the confusion of philosophical controversies, terminologies, and systems whose conflict or disagreement is to be mainly attributed (as indeed can be generally seen) to the lack of definiteness of the basic concepts. The blame must be placed almost entirely upon the imperfections of the language in which we are forced to argue from the outset.

Given the sense [of ‘conceptual notation’] which I sought to indicate in the above remarks, it must be said that Frege’s title, Conceptual Notation, promises too much—more precisely, that the title does not correspond at all to the content [of the book]. Instead of leaning toward a universal characteristic, the present work (perhaps unknown to the author himself) definitely leans toward Leibniz’s “calculus ratiocinator”. In the latter direction, the present little book makes an advance which I should consider very creditable, if a large part of what it attempts had not already been accomplished by someone else, and indeed (as I shall prove) in a doubtlessly more adequate fashion.

The book is clearly and refreshingly written and also rich in perceptive comments. The examples are pertinent; and I read with genuine pleasure nearly all the secondary discussions which accompany Frege’s theory; for example, the excellently written Preface. On the other hand, I can pass no such unqualified judgement upon the major content—the formula notation itself.

… First of all, I consider it a shortcoming that the book is presented in too isolated a manner and not only seeks no serious connection with achievements that have been made in essentially similar directions (namely those of Boole), but even disregards them entirely. The only comment that the author makes which is remotely concerned with [Boole’s achievements] is the statement… which reads, “Any effort to create an artificial similarity by regarding a concept as the sum of its marks [Merkmale] was entirely alien to my thought.” (I have copied the translation used in the above excerpt from Frege.) This comment even by itself lends a certain probability to the supposition, which gains confirmation in other ways, that the author has an erroneous low opinion of “those efforts” simply because he lacks knowledge of them.

… However, the comment (which I shall prove below) that might contribute most effectively to the correction of opinions is that the Fregean “conceptual notation” does not differ so essentially from Boole’s formula language … With the exception of what is said on pages 15-22 about “function” and “generality” … the book is devoted to the establishment of a formula language, which essentially coincides with Boole’s mode of presenting judgements and Boole’s calculus of judgements, and which certainly in no way achieves more.

With regard to its major content, the “conceptual notation” could be considered actually a transcription of the Boolean formula language. With regard to its form, though, the former is different beyond recognition—and not to its advantage. As I have said already it was without doubt developed completely independently—all too independently!

If the author’s notation does have an advantage over the Boolean one, which eluded me, it certainly also has a disadvantage. I think that to anyone who is familiar with both, [the author’s notation] must above all give the impression of hiding—to be sure not intentionally, but certainly “artificially”—the many beautiful, real, and genuine analogies which the logical formula language naturally bears with regard to the mathematical one.

In the subtitle, “A Formula Language Modelled Upon that of Arithmetic”, I find the very point in which the book corresponds least to its advertised program, but in which a much more complete correspondence could be attained—precisely by means of the neglected emulation of previous works. If, to the impartial eye, the “modelling” appears to consist of nothing more than using letters in both cases, then it seems to me this does not sufficiently justify the epithet used.

In other words, that same correspondence between abstract algebra (or its modern generalization in category theory) and formal logic was taken by Schröder as evidence that the latter is completely superfluous. Frege’s response amounts to: of course they correspond; Boole and I invented our notations to discuss the same thing! Nevertheless, there can be value in different forms of notation:

On the Purpose of the Begriffsschrift, 1882 Among other things I stand reproached with having left the works of Boole out of account. E. Schröder is one of those who offer this reproach: comparing my Begriffsschrift with the Boolean formula-language [Formelsprache] in his review (Zeitschr. f. Math. u. Phys., Vol. 25), he comes to the conclusion that the latter is to be preferred in every respect. …

Regarding the aforementioned reproach I should like to remark that in the twenty years and more since it was devised the Boolean formula-language has by no means achieved such a signal success that to abandon henceforth the foundations it laid must be regarded as foolish, or that anything except a further development is out of the question. The problems Boole deals with, after all, seem for the most part to have been first thought up in order to be solved by means of his formulas.

Above all, though, that reproach overlooks the fact that my purpose was quite other than Boole’s. I was not trying to present an abstract logic in formulas; I was trying to express contents in an exacter and more perspicuous manner than is possible in words, by using written symbols. I was trying, in fact, to create a ‘lingua characteristica’ in the Leibnizian sense, not a mere ‘calculus ratiocinator’—not that I do not recognize such a deductive calculus as a necessary constituent of a Begriffsschrift. If this has been misunderstood, perhaps it is because I concentrated overmuch on the abstract logic aspect in my exposition.

… Boole distinguishes primary propositions from secondary propositions. (See The Monist in Part 2.) The former compare concepts in respect of their extensions; the latter express relations between possible contents of judgment. This classification is unsatisfactory since it provides no place for existential judgments. Let us take the primary propositions first. Here the letters stand for extensions of concepts. Individual things as such cannot be designated, and this is a considerable shortcoming of the Boolean formula-language; for even if only a single thing is comprehended by a concept, there is still always a vast difference between the concept and that thing.

… So consequential are the differences between logical and mathematical calculation that the solving of logical equations, which Boole is especially concerned with, has scarcely anything in common with the solving of algebraic ones. The subordination of one concept to another can now be expressed thus:

$$A = A \cdot B$$

If e.g. A stands for the extension of the concept ‘mammal’ and B for that of the concept ‘airbreathing’, then the equation means the extensions of the concepts ‘mammal’ and ‘airbreathing mammal’ are equal; i.e.: all mammals are airbreathing. The falling of an individual under a concept, which is entirely different from the subordination of one concept to another, has no special expression in Boole: indeed, strictly speaking, it has no expression at all. Everything thus far is already to be found, with only superficial divergences, in Leibniz—of whose works relevant to this subject Boole knew nothing. In Boole, 0 designates the extension of a concept under which nothing falls; 1 stands for the extension of a concept under which falls everything which is directly under discussion (universe of discourse). We see that the meanings of these signs, too, and especially that of 1, diverge from their arithmetical ones. Leibniz has “non ens” and “ens” instead of them.

… Surveying the Boolean formula-language as a whole, we recognise that it is abstract logic clothed in the garments of algebraic symbols; it is not adapted to the reproduction of a content—nor is that its purpose. And just this is my intention. I want to fuse together the few signs I introduce, with the symbols of mathematics already at hand, into a single formula-language. In it the existing symbols correspond roughly to the roots of words in ordinary language, while the signs I have added are comparable to the word endings and particles which impose logical relations upon the contents contained in those roots.

I could not use the Boolean notation for this, for it is out of the question to have the + sign, for instance, occurring in one and the same formula with now a logical and now an arithmetical sense. The analogy, prized by Boole, between the logical and the arithmetical methods of calculation, can issue only in confusion if the two are brought into relation with each other. Boole’s sign-language is conceivable only in total separation from arithmetic.

Hence I had to invent other symbols for the logical relations. Schröder says that my Begriffsschrift has almost nothing in common with the Boolean calculus of concepts, but that it does have something in common with the Boolean calculus of judgments [he means the interpretation of Boolean algebra in which variables denote classes, as opposed to the one in which they denote propositions]. In fact, one of the most important ways in which my interpretation differs from the Boolean (and, I might add, from the Aristotelian) is that I take judgments and not concepts as my starting-point, though this is by no means to say that I am unable to express the relation of subordination between concepts.

… Schröder proceeds everywhere in his criticism from a direct comparableness of the Begriffsschrift with the Leibniz-Boole formula-language — a comparableness which is not to be had. His most effective contribution towards putting things to rights, he considers, is his observation that the two systems of notation are not essentially different, since it is possible to translate from one into the other. But this proves nothing. If the same subject-matter can be presented in two systems of symbols it follows automatically that translation or transcription from one to the other is possible. From this possibility, conversely, nothing more follows than the presence of a common subject-matter: the systems of symbols can still be basically different.

… I regard this notation [of the universal quantifier] as one of the most important features of my Begriffsschrift, and one in respect of which it has a considerable advantage over Boole’s mode of expression, even as a mere representation of the logical forms. By its means the Boolean artifice is replaced by an organic relationship between primary and secondary propositions.

It is interesting that Frege points out a difference between the algebraic and the formal-logic approaches that lasts to this day: whereas algebras (in general, and of logic in particular) talk about concepts (collections of objects, though this can also be taken to mean any mathematical object that could be considered made of individuals, like functions that can be applied at a point) in a restricted universe of discourse and treat them as indivisible, formal logic can also talk about the individual through the use of quantifiers. Whether or not this is preferable depends, of course, on context and usage. (The mathematician Terence Tao has described algebra as, essentially, a clever hiding of specific uses of quantifiers.)
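Frege's example from the quotation above, ‘all mammals are airbreathing’, can be made concrete with a minimal Python sketch of the class interpretation as Frege describes it. Extensions are modeled as finite sets over a small, made-up universe of discourse, logical multiplication is intersection, 0 is the empty class and 1 the whole universe, and the universal affirmative becomes the equation of A with AB. The universe, the animals in it, and the helper names are hypothetical. Note that an individual falling under a concept can only be checked with Python's membership test, which lies outside the algebra of equations between classes. That is precisely the gap Frege points to.

```python
# A toy model of the Boolean class calculus (an illustrative sketch, not Boole's notation).
# Hypothetical universe of discourse: Boole's 1.
ONE = frozenset({"dog", "whale", "bat", "trout", "sparrow"})
ZERO = frozenset()  # Boole's 0: the class under which nothing falls

mammal = frozenset({"dog", "whale", "bat"})                   # A
airbreathing = frozenset({"dog", "whale", "bat", "sparrow"})  # B


def times(x: frozenset, y: frozenset) -> frozenset:
    """Logical multiplication: the extension of 'x that is also y'."""
    return x & y


def complement(x: frozenset) -> frozenset:
    """Boole's 1 - x: everything in the universe that is not in x."""
    return ONE - x


def all_are(a: frozenset, b: frozenset) -> bool:
    """'All A are B' rendered as the equation A = AB between extensions."""
    return a == times(a, b)


print(all_are(mammal, airbreathing))   # True
print(all_are(airbreathing, mammal))   # False: the sparrow breathes air
print(times(mammal, complement(airbreathing)) == ZERO)  # True: no non-airbreathing mammals
# An individual falling under a concept is not an equation between classes;
# here it needs Python's membership test, outside the algebra above.
print("dog" in mammal)  # True
```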

In an in-depth, nuanced discussion of the Frege-Schröder debate and their respective understanding of the Leibnizian program, Volker Peckhaus writes:

Calculus Ratiocinator vs. Characteristica Universalis? The Two Traditions in Logic, Revisited, p. 10 [B]oth Frege and Schröder, criticized the concurring system as being a mere calculus ratiocinator. Both claimed, on the other hand, that their own system was the better realization of the Leibnizian idea of a characteristica universalis. Both accept that a language would require both elements, and both aim at such a language. Schröder’s algebraic attitude requiring an external semantics seems to be closer to the original Leibnizian idea of a lingua rationalis. Furthermore, the universality of a universal characteristics is not bound to modern quantification theory.

But the fundamental difference between Frege and Boole is one of goals. Whereas Boole wanted to use algebra in order to subsume logic into mathematics, Frege wanted to create a logical foundation upon which mathematics can be established:

The Foundations of Arithmetic, 1884, pp. xv-xx It is sad and discouraging to observe how discoveries once made are always threatening to be lost again … and how much work promises to have been done in vain, because we fancy ourselves so well off that we need not bother to assimilate its results. My work too, as I am well aware, is exposed to this risk. A typical crudity confronts me, when I find calculation described as “aggregative mechanical thought”. I doubt whether there exists any thought whatsoever answering to this description. … Thought is in essentials the same everywhere: it is not true that there are different kinds of laws of thought to suit the different kinds of objects thought about. Such differences as there are consist only in this, that the thought is more pure or less pure, less dependent or more upon psychological influences and on external aids such as words or numerals, and further to some extent too in the finer or coarser structure of the concepts involved; but it is precisely in this respect that mathematics aspires to surpass all other sciences, even philosophy.

The present work will make it clear that even an inference like that from n to n + 1, which on the face of it is peculiar to mathematics, is based on the general laws of logic, and that there is no need of special laws for aggregative thought. It is possible, of course, to operate with figures mechanically, just as it is possible to speak like a parrot: but that hardly deserves the name of thought. It only becomes possible at all after the mathematical notation has, as a result of genuine thought, been so developed that it does the thinking for us, so to speak.

… We suppose, it would seem, that concepts sprout in the individual mind like leaves on a tree, and we think to discover their nature by studying their birth: we seek to define them psychologically, in terms of the nature of the human mind. But this account makes everything subjective, and if we follow it through to the end, does away with truth. What is known as the history of concepts is really a history either of our knowledge of concepts or of the meanings of words. Often it is only after immense intellectual effort, which may have continued over centuries, that humanity at last succeeds in achieving knowledge of a concept in its pure form, in stripping off the irrelevant accretions which veil it from the eyes of the mind. What, then, are we to say of those who, instead of advancing this work where it is not yet completed, despise it, and betake themselves to the nursery, or bury themselves in the remotest conceivable periods of human evolution, there to discover, like John Stuart Mill, some gingerbread or pebble arithmetic! It remains only to ascribe to the flavour of the bread some special meaning for the concept of number. A procedure like this is surely the very reverse of rational, and as unmathematical, at any rate, as it could well be. No wonder the mathematicians turn their backs on it. Do the concepts, as we approach their supposed sources, reveal themselves in peculiar purity? [Note: Frege may be criticizing the very goal of my text, but sometimes, after the concepts have been purified so much that their origins are forgotten, traveling back to their source (like tracing the evolution of the eye) may reveal exactly which stages of development were most crucial to which aspect of the concept, and so may reveal some possible paths forward.] Not at all; we see everything as through a fog, blurred and undifferentiated.
It is as though everyone who wished to know about America were to try to put himself back in the position of Columbus, at the time when he caught the first dubious glimpse of his supposed India. Of course, a comparison like this proves nothing; but it should, I hope, make my point clear. It may well be that in many cases the history of earlier discoveries is a useful study, as a preparation for further researches; but it should not set up to usurp their place.

The Foundations of Arithmetic, pp. 21-22 … Statements in Leibniz can only be taken to mean that the laws of number are analytic, as was to be expected, since for him the a priori coincides with the analytic. Thus he declares that the benefits of algebra are due to its borrowings from a far superior science, that of the true logic. In another passage he compares necessary and contingent truths to commensurable and incommensurable magnitudes, and maintains that in the case of necessary truths a proof or reduction to identities is possible. However, these declarations lose some of their force in view of Leibniz’s inclination to regard all truths as provable: “Every truth”, he says, “has its proof a priori derived from the concept of the terms, notwithstanding it does not always lie in our power to achieve this analysis.”

… To quote Mill: “The doctrine that we can discover facts, detect the hidden processes of nature, by an artful manipulation of language, is so contrary to common sense, that a person must have made some advances in philosophy to believe it.”

Very true—if it be supposed that during the artful manipulation we do not think at all. Mill is here criticizing a kind of formalism that scarcely anyone would wish to defend. Everyone who uses words or mathematical symbols makes the claim that they mean something, and no one will expect any sense to emerge from empty symbols. But it is possible for a mathematician to perform quite lengthy calculations without understanding by his symbols anything intuitable, or with which we could be sensibly acquainted. And that does not mean that the symbols have no sense; we still distinguish between the symbols themselves and their content, even though it may be that the content can only be grasped by their aid. We realize perfectly that other symbols might have been arranged to stand for the same things. All we need to know is how to handle logically the content as made sensible in the symbols and, if we wish to apply our calculus to physics, how to effect the transition to the phenomena.

The Foundations of Arithmetic, p. 99 … I hope I may claim in the present work to have made it probable that the laws of arithmetic are analytic judgements and consequently a priori. Arithmetic thus becomes simply a development of logic, and every proposition of arithmetic a law of logic, albeit a derivative one. To apply arithmetic in the physical sciences is to bring logic to bear on observed facts; calculation becomes deduction. The laws of number will not… need to stand up to practical tests if they are to be applicable to the external world; for in the external world, in the whole of space and all that therein is, there are no concepts, no properties of concepts, no numbers. The laws of number, therefore, are not really applicable to external things; they are not laws of nature. They are, however, applicable to judgements holding good of things in the external world: they are laws of the laws of nature. They assert not connexions between phenomena, but connexions between judgements; and among judgements are included the laws of nature.

Frege created a new field of study in logic, the foundations of mathematics, which seeks to construct formal systems (logics) that can describe all of known mathematics; the field was first fully realized in Russell and Whitehead’s Principia Mathematica. In his precise foundation, he used sets, as studied by Georg Cantor (1845-1918) in his set theory, to precisely model Leibniz’s “concepts”, Euler’s “notions” and Boole’s “classes”.

Unfortunately, Frege’s invention of formal logic did not bring about Leibniz’s dream of an end to human quarrels, even in matters of mathematics. The foundations of mathematics were the ground of famous disputes in the early twentieth century, as questions regarding the philosophy of mathematics turned out to be essential in the choice of axioms for the new formalisms. The philosophy of mathematics is a big and serious topic, one that is, unfortunately, almost always misrepresented when discussed online, and that I am certain to misrepresent here. My goal is simply to present the kind of disputes that arose, as they are a result of a fundamental feature of Frege’s entire program, one that was addressed in subsequent work on computation.

Several schools of thought emerged. (Heijenoort’s From Frege to Gödel contains several primary sources pertaining to this controversy: Brouwer’s On the Significance of the Principle of Excluded Middle in Mathematics, 1923, and Intuitionistic Reflections on Formalism, 1927; Hilbert’s On the Infinite, 1925, and The Foundations of Mathematics, 1927; and Hermann Weyl’s comments on Hilbert’s second lecture on the foundations of mathematics, 1927. It is always interesting to read what the debating parties actually said.) The first, Frege and Russell’s, was that mathematics can be reduced to logic, meaning that a few self-evident logical axioms entail all of mathematics, and that its truths stem from the obvious truths of logic. This view is evident in Frege’s words, “even an inference like that from n to n + 1, which on the face of it is peculiar to mathematics, is based on the general laws of logic, and that there is no need of special laws for aggregative thought” (The Foundations of Arithmetic, p. xvi). This school was called Logicism. In addition, Russell espoused a kind of Platonist realism, believing that all mathematical objects, even infinitary ones, have a real existence that we uncover through the study of mathematics.

L. E. J. Brouwer (1881-1966) held that some logical axioms, in particular the law of excluded middle, which says that every proposition is either true or false, do not hold when they concern infinitary mathematical objects. The truth of mathematical statements, like that of statements concerning the natural world, is to be established empirically by testing (even if only in principle), and some mathematical propositions regarding infinitary objects cannot be tested by finite mechanisms like the human mind. For example, determining whether a real number is equal to zero may require examining infinitely many digits of its decimal expansion, and so the truth or falsity of that proposition cannot, in general, be determined. Brouwer uncovered just how much mathematics, infinitesimal calculus in particular, relies on such reasoning, which he considered simply incorrect, and so much of mathematics had to be chucked. The school he founded was called Intuitionism.

Finally, David Hilbert (1862-1943) found a way to admit classical mathematics while still rejecting the reality of infinitary objects with his philosophy of Formalism. He contended that mathematics comprises two kinds of statements: “real” propositions, regarding finitary objects and relating to observations about the real world, and “ideal” propositions, regarding infinitary objects. As long as the ideal propositions are consistent with the real ones, and as long as the inferences used are finitary (i.e., operate on finite logical statements and are of finite length), ideal propositions are allowed, even though they do not contain any real meaning, in the sense of referencing real objects. What matters is not the “real” correctness of the mathematical objects, but the reality and correctness of the laws of deriving mathematical statements. (For a discussion of the deeper philosophical difference between Hilbert’s and Frege’s points of view, see The Frege-Hilbert Controversy, in the Stanford Encyclopedia of Philosophy.)

(Due to the intense personal quarrel between Brouwer and Hilbert, it is sometimes believed that Brouwer saw in Formalism the enemy of Intuitionism. This is false. ‘While logicism and intuitionism were too far apart to allow a dialogue between them,’ Heijenoort writes (p. 490), ‘the emergence of Hilbert’s meta-mathematics created between Hilbert and Brouwer a ground on which a discussion could proceed.’ So much so that in Intuitionistic Reflections on Formalism, 1927, Brouwer writes that he expects the differences between the two ‘will vanish’. For example, he thought that the two philosophies agreed that in ‘intuitive’ or ‘contentual’ mathematics, the law of excluded middle can be applied ‘thoughtlessly’ only to finite objects.)
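The intuitionist point about testing whether a real number equals zero can be sketched in Python. This is a minimal illustration under an assumption of my own choosing, not Brouwer's formulation: instead of a stream of decimal digits, a real number is represented by a function `approx(n)` returning a rational within 2^-n of it, a common constructive encoding. The hypothetical helper `apart_from_zero` can confirm in finitely many steps that a number differs from zero whenever it does, but no finite budget of steps can ever confirm that a number is zero; the question is only semi-decidable.

```python
from fractions import Fraction
from typing import Callable, Optional

# Assumption: a real number x is given as a function approx(n) that returns a
# rational within 2**-n of x (rational approximations stand in for decimal digits).
Real = Callable[[int], Fraction]


def apart_from_zero(x: Real, max_steps: int) -> Optional[bool]:
    """Look for finite evidence that x != 0.

    Returns True once some approximation q satisfies |q| > 2**-n, which
    forces |x| >= |q| - 2**-n > 0. Returns None when the budget runs out:
    no finite number of approximations can ever establish that x == 0.
    """
    for n in range(max_steps):
        q = x(n)
        if abs(q) > Fraction(1, 2 ** n):
            return True
    return None


zero: Real = lambda n: Fraction(0)
tiny: Real = lambda n: Fraction(1, 10 ** 6)  # exactly one millionth

print(apart_from_zero(tiny, 50))  # True (evidence found at n = 20)
print(apart_from_zero(tiny, 10))  # None: not enough precision yet
print(apart_from_zero(zero, 50))  # None, and no larger budget would change that
```

The asymmetry between the last two calls is the point: for `tiny` a bigger budget eventually yields an answer, while for `zero` the search can only keep saying "not yet".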

It should be noted that all three schools, Logicism, Intuitionism and Formalism, suffer from severe flaws. For example, it turns out that formalizing mathematics requires some axioms (about the non-finiteness of some collections of objects, and various induction rules) that are decidedly mathematical rather than purely logical in nature, which undermines Logicism, not to mention its Platonist variant, which requires accepting the real existence of mathematical objects; Intuitionism is arbitrary and, except for simply rejecting the law of excluded middle, cannot prescribe a non-contrived philosophical principle (as opposed to a creed) by which it can be unambiguously determined which axioms and inferences are admissible and which are not; Formalism relies on an assumption of the consistency of mathematics that, as it turns out, cannot be proven by the finitary means Formalism itself admits. None of the three (nor any of the many other schools in the philosophy of mathematics established since) can be said to be clearly superior to the others or more obviously correct, and so the choice comes down to an aesthetic (perhaps Leibniz would call it “emotional”) preference. But much more importantly, at least to my focus as a programmer, the choice of a mathematical foundation cannot possibly have any effect whatsoever when its subject matter is computation. Computation, from Hobbes to Turing, is tied to the physical, and were a mathematical system to yield a result falsified in the physical world, it would be discarded immediately; all foundations must necessarily yield the same (physical) result. Thus, as far as computation is concerned, inasmuch as different mathematical philosophies prescribe the use of different formalisms, the choice among them should be purely pragmatic and aesthetic, and possibly not universal: one should pick whatever language is convenient and appealing.

(On the arbitrariness of Intuitionism: in modern terminology, all natural numbers are intuitionistically ‘realized’. This cannot be philosophically justified by anything other than an equivalent restatement of this axiom, or by a creed like the one attributed to Leopold Kronecker, ‘God made the integers, all else is the work of man,’ here taken to mean ‘God made all the integers’. That this axiom is more obvious than the law of excluded middle, being logically weaker, does not make it actually obvious, and the philosophical principle by which the former is obvious enough to be an axiom while the latter is not is unclear. That the principles of intuitionism coincide with the notion of computability is not a philosophical justification, because computability, as Turing himself pointed out (Lecture to the London Mathematical Society on 20 February 1947, p. 1), is just a convenient approximation of a stronger notion, that of feasibility. Indeed, in recent decades feasibility has replaced computability as the central theoretical notion in computer science. Put bluntly, intuitionism cannot explain why classical mathematics must be abandoned in favor of intuitionism on the grounds of philosophical or physical fidelity, while intuitionism should not, by the same justification, be abandoned in favor of ultrafinitism; or, conversely, if intuitionism is an acceptable mathematical approximation of the reality of ultrafinitism, why classical mathematics is not.)

The source of those disputes was a fundamental feature of Frege’s formal logic, and also of Boole’s and Leibniz’s. While Frege’s formal deduction was no doubt mechanical or “algorithmic”, arguably even more so than Boole’s system, in Frege’s logic, as in Boole’s, the mechanical rules of inference correspond to the “laws of thought,” but each and every symbol, sentence or part of a sentence, including the axioms and the inference rules themselves, must also have a contentual meaning; they must directly refer to an intuitive mental notion (like a set, a function, intersection, addition etc.). “Calculation becomes deduction,” but “it only becomes possible at all after the mathematical notation has, as a result of genuine thought, been so developed that it does the thinking for us, so to speak,” and, “[e]veryone who uses words or mathematical symbols makes the claim that they mean something, and no one will expect any sense to emerge from empty symbols.” It is this obligation that every symbol or combination of symbols be meaningful in an intuitive sense (and that this intuitive content is what justifies the logic) that invites controversy over axioms and inference rules, as the axioms and inference rules must make intuitive sense, and what makes intuitive sense to one may not make sense to another. This contentual or conceptual basis for the entire theory was the root of the deep disputes over formal systems.

Indeed, the meaning of symbols and its relation to the signs themselves was a topic of great interest to Frege. When pondering the meaning of the equality sign, Frege observed that symbols and statements in both his Begriffsschrift and natural language have two kinds of meaning, which he calls sense and reference (or denotation in some translations):

On Sense and Reference, pp. 36-38, 1892

A portrait of Gottlob Frege by Renee Bolinger, paired with Van Gogh’s Starry Night, as a pun on Frege’s famous puzzle about the significance of learning that ‘Hesperus is Phosphorus.’ (source: the artist’s website; used with permission)

Equality gives rise to challenging questions which are not altogether easy to answer. Is it a relation? A relation between objects, or between names or signs of objects? In my Begriffsschrift I assumed the latter. The reasons which seem to favour this are the following: $a = a$ and $a = b$ are obviously statements of differing cognitive value; $a = a$ holds a priori and, according to Kant, is to be labelled analytic, while statements of the form $a = b$ often contain very valuable extensions of our knowledge and cannot always be established a priori. The discovery that the rising sun is not new every morning, but always the same, was one of the most fertile astronomical discoveries… Now if we were to regard equality as a relation between that which the names ‘a’ and ‘b’ designate, it would seem that $a = b$ could not differ from $a = a$ (i.e. provided $a = b$ is true). A relation would thereby be expressed of a thing to itself, and indeed one in which each thing stands to itself but to no other thing. What is intended to be said by $a = b$ seems to be that the signs or names ‘a’ and ‘b’ designate the same thing, so that those signs themselves would be under discussion; a relation between them would be asserted. But this relation would hold between the names or signs only in so far as they named or designated something. It would be mediated by the connexion of each of the two signs with the same designated thing… If the sign ‘a’ is distinguished from the sign ‘b’ only as object (here, by means of its shape), not as sign (i.e. not by the manner in which it designates something), the cognitive value of $a = a$ becomes essentially equal to that of $a = b$, provided $a = b$ is true.
A difference can arise only if the difference between the signs corresponds to a difference in the mode of presentation of that which is designated… It is natural, now, to think of there being connected with a sign (name, combination of words, letter), besides that to which the sign refers, which may be called the reference of the sign, also what I should like to call the sense of the sign, wherein the mode of presentation is contained… The reference of ‘evening star’ would be the same as that of ‘morning star,’ but not the sense. [Frege used the terms ‘morning star’ (Morgenstern) and ‘evening star’ (Abendstern); later philosophers changed the example to use the proper names Hesperus and Phosphorus, both referring to the planet Venus.]

… The regular connexion between a sign, its sense, and its reference is of such a kind that to the sign there corresponds a definite sense and to that in turn a definite reference, while to a given reference (an object) there does not belong only a single sign… It may perhaps be granted that every grammatically well-formed expression representing a proper name always has a sense. But this is not to say that to the sense there also corresponds a reference. The words ‘the celestial body most distant from the Earth’ have a sense, but it is very doubtful if they also have a reference. [This corresponds to a non-terminating term in a programming language: it has a computational sense, but no denotation (unless some denotation, such as $\bot$, is artificially assigned to it).] The expression ‘the least rapidly convergent series’ has a sense but demonstrably has no reference, since for every given convergent series, another convergent, but less rapidly convergent, series can be found. In grasping a sense, one is not certainly assured of a reference.
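The remark about non-terminating terms can be illustrated with a definite description computed by search, a close programming analogue of ‘the least rapidly convergent series’. The following Python sketch is only an illustration, not anything from Frege: `least` is a hypothetical helper implementing unbounded minimization (‘the least n such that …’), with an artificial step budget, `fuel`, added so that the example always terminates. Both calls below are perfectly meaningful procedures, but only the first picks out a number.

```python
from typing import Callable, Optional


def least(pred: Callable[[int], bool], fuel: int) -> Optional[int]:
    """'The least n such that pred(n)': an unbounded search, cut off after
    `fuel` candidates so that this sketch always returns.

    None stands in for a missing denotation (what semantics writes as bottom);
    without the cutoff, a search with no answer would simply never terminate.
    """
    for n in range(fuel):
        if pred(n):
            return n
    return None


print(least(lambda n: n * n > 50, fuel=1000))   # 8
print(least(lambda n: n * n == 2, fuel=1000))   # None: a sense, but no reference
```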

On Sense and Reference, pp. 42-44 … We are therefore driven into accepting the truth value of a sentence as constituting its reference. By the truth value of a sentence I understand the circumstance that it is true or false. There are no further truth values. For brevity I call the one the True, the other the False. Every declarative sentence concerned with the reference of its words is therefore to be regarded as a proper name, and its reference, if it has one, is either the True or the False.

… If our supposition that the reference of a sentence is its truth value is correct, the latter must remain unchanged when a part of the sentence is replaced by an expression having the same reference. And this is in fact the case. Leibniz gives the definition: ‘Eadem sunt, quae sibi mutuo substitui possunt, salva veritate.’ [Those things are the same which can be substituted for one another without loss of truth.] What else but the truth value could be found, that belongs quite generally to every sentence if the reference of its components is relevant, and remains unchanged by substitutions of the kind in question? [This paragraph is possibly the earliest treatment of what’s known as ‘referential transparency,’ a term that has recently been subject to much misinterpretation. Virtually all programming languages are referentially transparent as long as they do not employ syntactic macros, while some useful mathematical logics, for example the modal logics, are not, for reasons covered by Frege in this paper, namely, that they have the ability to talk about sentences. The term as now used by some in the context of functional programming is actually misapplied to refer to the particular denotation used in functional languages (in which a function application denotes the value that function evaluates to), and is of no general theoretical interest.]

If now the truth value of a sentence is its reference, then on the one hand all true sentences have the same reference and so, on the other hand, do all false sentences. From this we see that in the reference of the sentence all that is specific is obliterated. We can never be concerned only with the reference of a sentence; but again the mere thought alone yields no knowledge, but only the thought together with its reference, i.e. its truth value. Judgments can be regarded as advances from a thought to a truth value…. One might also say that judgments are distinctions of parts within truth values. Such distinction occurs by a return to the thought. To every sense belonging to a truth value there would correspond its own manner of analysis.
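Leibniz's substitution principle, as Frege uses it here, can be illustrated with a toy Python example. It is a loose analogy under stated assumptions, not a semantics of any real language: strings play the role of Frege's signs, `eval` plays the role of taking their reference, and the two hypothetical contexts differ only in whether they look at the reference or at the sign itself. Substituting one sign for another with the same reference preserves the result in the first context but not in the second, which, like a quotation or a syntactic macro, talks about the sign.

```python
# Strings play the role of Frege's signs; eval() plays the role of taking
# their reference. This is a toy illustration, not a general semantics.
sign_a = "2 + 2"
sign_b = "4"

print(eval(sign_a) == eval(sign_b))  # True: same reference


def square(x: int) -> int:
    """A context that uses only the reference of its argument."""
    return x * x


def sign_length(sign: str) -> int:
    """A context that talks about the sign itself, like a quotation or a macro."""
    return len(sign)


# Transparent context: substituting one sign for the other preserves the result.
print(square(eval(sign_a)) == square(eval(sign_b)))  # True
# Opaque context: the same substitution changes the result.
print(sign_length(sign_a) == sign_length(sign_b))    # False
```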

On Sense and Reference, p. 56 … Let us return to our starting point.

When we found ‘$a = a$’ and ‘$a = b$’ to have different cognitive values, the explanation is that for the purpose of knowledge, the sense of the sentence, viz., the thought expressed by it, is no less relevant than its reference, i.e. its truth value. If now $a = b$, then indeed the reference of ‘b’ is the same as that of ‘a,’ and hence the truth value of ‘$a = b$’ is the same as that of ‘$a = a$.’ In spite of this, the sense of ‘b’ may differ from that of ‘a’, and thereby the thought expressed in ‘$a = b$’ differs from that of ‘$a = a$.’ In that case the two sentences do not have the same cognitive value. If we understand by ‘judgment’ the advance from the thought to its truth value, as in the above paper, we can also say that the judgments are different.

In the context of programming languages, J.-Y. Girard (Proofs and Types, pp. 1-3) presents the reference of a program term as its denotational semantics and its sense as its operational semantics. Frege points out that different senses corresponding to the same referent require different manners of analysis, and in formal logic, sentences of different senses referring to the same truth value, True, require different “judgments”, i.e., different proofs. A formal proof (one that is just a sequence of applications of various syntactic inference rules) corresponds to computation; as Frege says, “calculation becomes deduction”. (Frege does not relate sense to computation; Girard does, as does Y. N. Moschovakis in his 1994 Sense and denotation as algorithm and value.)

Another problem with the Fregean tradition of formal logic that stood (and stands to this day) in the way of fulfilling Leibniz’s dream was that, with time, as logical systems grew ever more elaborate and sophisticated, they became arcane and ever more estranged from the work of practitioners, namely mathematicians, who, by and large, have rejected any and all formalisms. Emil Post wrote (Recursively enumerable sets… and their decision problems, 1944, p. 284), “That mathematicians generally are oblivious to the importance of this work… as it affects the subject of their own interest is in part due to the forbidding, diverse and alien formalisms in which this work is embodied”, and John von Neumann wrote (The General and Logical Theory of Automata, 1948 or 1951, in Collected Works, Volume 5, p. 303), “Everybody who has worked in formal logic will confirm that it is one of the technically most refractory parts of mathematics.”

Calculation Becomes Deduction

Work on formal logic in the style of Frege continued with Whitehead and Russell (Russell had found an inconsistency, “Russell’s paradox,” in Frege’s mathematical foundation, which Frege took in stride), Hilbert, Paul Bernays (1888-1977), Leopold Löwenheim (1878-1957), Thoralf Skolem (1887-1963), and later Kurt Gödel and Gerhard Gentzen (1909-1945). In the meantime, abstract algebra developed independently, with progress in group theory and Alfred North Whitehead’s 1898 publication of A Treatise on Universal Algebra; but with the development of Frege’s formal logic, logic was no longer identified with algebra as strongly as it had been during the nineteenth century (although many of those working on formal logic also did significant work in algebra), and so we will leave algebra behind. Computation and logic, however, were still considered a single subject.

(See Russell’s letter to Frege, 1902 (From Frege to Gödel, pp. 124-125), and Frege’s response (From Frege to Gödel, pp. 127-128), where he wrote: “Your discovery of the contradiction caused me the greatest surprise and, I would almost say, consternation, since it has shaken the basis on which I intended to build arithmetic… I must reflect further on the matter. It is all the more serious since, with the loss of my Rule V, not only the foundations of my arithmetic, but also the sole possible foundations of arithmetic, seem to vanish. Yet, I should think, it must be possible to set up conditions… such that the essentials of my proofs remain intact. In any case your discovery is very remarkable and will perhaps result in a great advance in logic, unwelcome as it may seem at first glance.”)

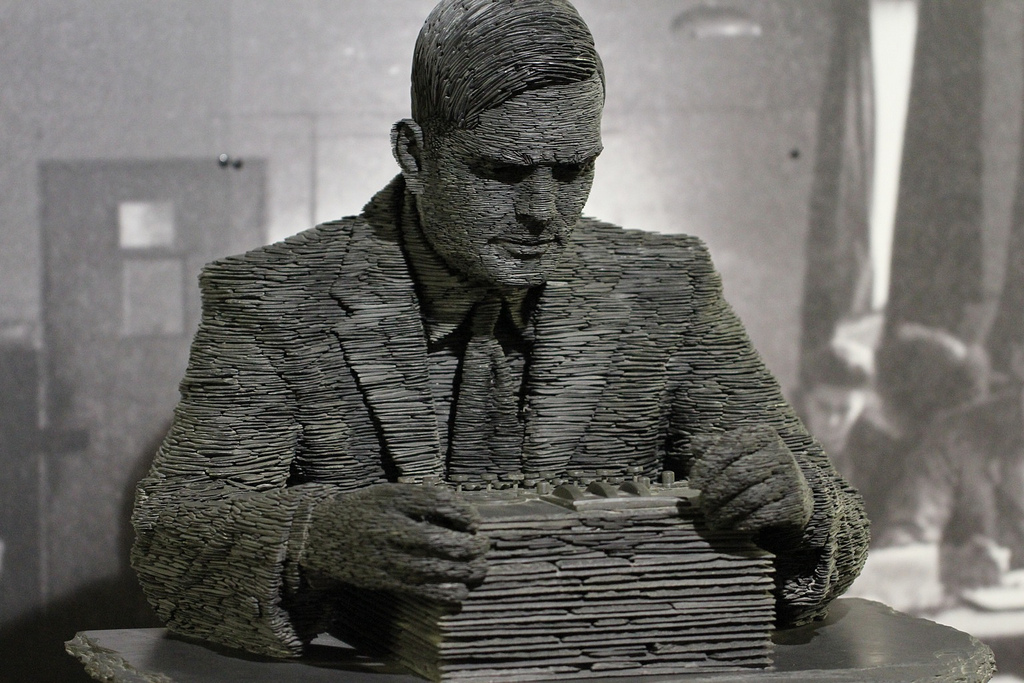

Robin Gandy (1919-1995), Alan Turing’s friend and only doctoral student, wrote a fascinating paper in which he summarized precisely who knew what, when and how in the years leading to the great discoveries in computation in 1936. It was clear to Hilbert and other logicians of his time (as it had been to Leibniz) that formal proof is a form of computation:

Quoted and translated by Robin Gandy in The Confluence of Ideas in 1936, pp. 91-92 [S]tarting with [The Foundations of Logic and Arithmetic,] 1905, [Hilbert] developed his ‘Beweistheorie’. The logical apparatus as well as the mathematical subject matter had to be formalized; formal proofs are then finite objects. Metamathematics is the study of these objects, and here Hilbert accepted Kronecker’s restrictions to ‘finitistic’ methods. Operations on formal proofs (such as reduction to a normal form) are to be (in an intuitive sense of the word) recursive; relations between (parts of) proofs are to be decidable; the prime method of metamathematical proof is induction on the construction of formal proofs. Although (prior to 1931) Hilbert and his group did not code proofs by numbers, the connection with numerical calculation was fairly clear. By careful arrangement of the data, algebraic and symbolic operations can be reduced (as Babbage had realized) to numerical ones. A general theory of proofs would require the investigation and classification of effectively calculable processes.
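Gandy's remark that symbolic operations can be reduced to numerical ones is what Gödel's 1931 arithmetization later made systematic. The following is a minimal Python sketch of one such encoding, a prime-power coding in the style Gödel used; the six-symbol alphabet and the function names are hypothetical, chosen only for illustration. Each formula becomes a single natural number from which the formula can be recovered by factoring, so operations on formulas can be carried out as operations on numbers.

```python
from typing import List

# Hypothetical alphabet; symbol i (0-based) is coded as the exponent i + 1.
ALPHABET = "()~>pq"


def first_primes(k: int) -> List[int]:
    """The first k primes, by trial division against the primes found so far."""
    found: List[int] = []
    candidate = 2
    while len(found) < k:
        if all(candidate % p != 0 for p in found):
            found.append(candidate)
        candidate += 1
    return found


def encode(formula: str) -> int:
    """Code the i-th symbol of the formula as the exponent of the i-th prime."""
    code = 1
    for p, symbol in zip(first_primes(len(formula)), formula):
        code *= p ** (ALPHABET.index(symbol) + 1)
    return code


def decode(code: int) -> str:
    """Recover the formula by dividing out 2, 3, 5, ... in turn."""
    symbols: List[str] = []
    k = 1
    while code > 1:
        p = first_primes(k)[-1]
        exponent = 0
        while code % p == 0:
            code //= p
            exponent += 1
        if not 1 <= exponent <= len(ALPHABET):
            raise ValueError("not the code of a formula")
        symbols.append(ALPHABET[exponent - 1])
        k += 1
    return "".join(symbols)


print(encode("(p)"))            # 12150 = 2**1 * 3**5 * 5**2
print(decode(encode("(p>q)")))  # (p>q)
```

Offsetting every exponent by one keeps each position visible in the factorization, which is why `decode` can reject numbers that skip a prime.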

Hilbert, who had already posed a famous algorithmic question in 1900 (his tenth problem, which asks for a procedure to decide whether a Diophantine equation has an integer solution), was impressed with the progress made in formal logic and the increased rigor it had brought to mathematics. He raised an algorithmic question about the nature of formal logic itself and, indeed, about the core of mathematics, concerning the capabilities and limits of formal systems. The famous Entscheidungsproblem, the decision problem, had appeared in several forms in the writings and talks of Hilbert and his students, and of others before them (as Stephen Kleene writes in Gödel’s impression on students of logic in the 1930’s, p. 56, “The Entscheidungsproblem for various formal systems had been posed by Schröder [in] 1895, Löwenheim [in] 1915, and Hilbert [in] 1918.”). It was given a definitive expression in a 1928 textbook Hilbert wrote with Wilhelm Ackermann (1896-1962):

(Quoted and translated by Robin Gandy in The Confluence of Ideas in 1936, p. 58) The Entscheidungsproblem is solved if one knows a procedure which will permit one to decide, using a finite number of operations, on the validity, respectively the satisfiability of a given [first-order] logical expression.

Hilbert was hopeful about a positive solution to the Entscheidungsproblem (though he did not assume one), famously declaring in a recorded 1930 address that there are no unsolvable problems in mathematics, but others did not share that optimism. More generally, on the question of whether there are any unsolvable problems in mathematics, Brouwer wrote:

(Quoted in The Confluence of Ideas in 1936, p. 61) It follows that the question of the validity of the principium tertii exclusi is equivalent to the question whether unsolvable mathematical problems can exist. There is not a shred of proof for the conviction which has sometimes been put forward that there exist no unsolvable mathematical problems.

Others, like John von Neumann (1903-1957), were particularly skeptical of a positive outcome. Von Neumann wrote in 1927:

(Quoted and translated by Robin Gandy in The Confluence of Ideas in 1936, p. 62) So it appears that there is no way of finding a general criterion for deciding whether or not a well-formed formula is a theorem. (We cannot at the moment prove this. We have no clue as to how such a proof of undecidability would go.) But this ignorance does not prevent us from asserting: As of today we cannot in general decide whether an arbitrary well-formed formula can or cannot be proved from the axiom schemata given below. And the contemporary practice of mathematics, using as it does heuristic methods, only makes sense because of this undecidability. When the undecidability fails then mathematics, as we now understand it, will cease to exist; in its place there will be a mechanical prescription for deciding whether a given sentence is provable or not.

G. H. Hardy (1877-1947) similarly said in a Cambridge lecture in 1928:

(Quoted in The Confluence of Ideas in 1936, p. 62) Suppose, for example, that we could find a finite system of rules which enabled us to say whether any given formula was demonstrable or not. This system would embody a theorem of metamathematics. There is of course no such theorem and this is very fortunate, since if there were we should have a mechanical set of rules for the solution of all mathematical problems, and our activities as mathematicians would come to an end.

Hilbert’s belief in the solvability of all mathematical questions was shattered by the publication of the incompleteness theorems of Kurt Gödel (1906-1978), who had first announced his result a day before Hilbert’s hopeful address. Although at the time no one except John von Neumann understood the result and its significance (Solomon Feferman, The nature and significance of Gödel’s incompleteness theorems, p. 10), it was a double blow to Hilbert’s program of mechanizing mathematics. Gödel first showed that unsolvable (undecidable) formal mathematical questions do exist. He then showed that the consistency of a formal system, which Hilbert viewed as the keystone of his philosophy of Formalism, cannot be proven within the system itself (i.e., using its own inference rules).

Gödel’s paper (Kurt Gödel, On Formally Undecidable Propositions of Principia Mathematica and Related Systems I, 1931, in From Frege to Gödel, pp. 596-616, or, in a different translation with modernized notation and mathematical terminology, by Martin Hirzel) is very important to our discussion of the relationship between logic and computation. However, its interesting parts are technical and should not be quoted partially, while its quotable parts are less interesting, so I will briefly summarize the pertinent ideas.

A portrait of Kurt Gödel by Renee Bolinger, rendered in the Art Nouveau style, chosen for its emphasis on recursion, which echoes Gödel’s prominent work with recursion in logic. (source: the artist’s website; used with permission)

Gödel uses the predicate calculus (“Hilbert’s functional calculus”) with the Peano axioms of arithmetic as a multi-sorted logic, so variables come in different sorts: a sort-1 variable stands for a natural number, a sort-2 variable for a set of natural numbers, a sort-3 variable for a set of sets of natural numbers, and so on. He then programs a meta-circular evaluator for this logic, i.e., he programs an interpreter for this logic in the logic itself, encoding formulas and proofs as natural numbers.

He does so by first defining the notion of a recursive function (primitive recursive in modern terminology). He makes no claims about the supposed universality of recursive functions, i.e., that every “calculable” function is primitive recursive (indeed, not every calculable function is). He shows that these functions can be formally defined and manipulated in the logic. He then encodes formulas and proofs as numbers, representing a proof as a sequence of formulas, each immediately inferable from the previous ones. Thus, when encoded, proofs become numbers: mathematical objects of the logic that can be discussed and manipulated within the logic itself. Finally, and most importantly, he represents the inference rules themselves as primitive-recursive functions.

Using those, he defines a relation $x B y$, which means that $x$ is (an encoding of) a proof of (the encoding of) the formula $y$. The relation $B$ is a “proof checker” programmed in the logic. He then defines an operator $Bew(y) \equiv \exists x.\, x B y$ (from the German beweisbar, “provable”), which means that $y$ encodes a provable formula. He uses it to show his main result: in a consistent logic (one that cannot prove both a sentence and its negation) there are true sentences that aren’t provable. He does this by encoding the sentence “this sentence is unprovable.” The first incompleteness theorem states that a consistent logic rich enough to express arithmetic has sentences that are true (for some particular definition of truth) yet are not provable. The second says that such a logic cannot prove its own consistency.
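The encoding step can be made concrete with a minimal sketch of a Gödel numbering in Python. It assumes a small hypothetical alphabet rather than Gödel's actual symbol table and coding scheme. Each symbol gets a positive code, and a sequence of symbols becomes a product of successive primes raised to those codes. By unique factorization, the formula can be recovered from its number.

```python
# Toy Gödel numbering. The alphabet and its codes are hypothetical,
# chosen for illustration; they are not Gödel's own symbol table.
SYMBOLS = ["0", "f", "~", "v", "A", "(", ")", "x", "="]
CODE = {sym: i + 1 for i, sym in enumerate(SYMBOLS)}  # codes start at 1
SYMBOL_OF = {code: sym for sym, code in CODE.items()}


def primes():
    """Yield 2, 3, 5, 7, ... by trial division (adequate for short formulas)."""
    found = []
    n = 2
    while True:
        if all(n % p for p in found):
            found.append(n)
            yield n
        n += 1


def godel_number(formula):
    """Encode symbols s1..sn as 2**code(s1) * 3**code(s2) * 5**code(s3) * ..."""
    number = 1
    for p, sym in zip(primes(), formula):
        number *= p ** CODE[sym]
    return number


def decode(number):
    """Recover the symbols by dividing out successive primes (unique factorization)."""
    formula = []
    for p in primes():
        if number == 1:
            return formula
        exponent = 0
        while number % p == 0:
            number //= p
            exponent += 1
        formula.append(SYMBOL_OF[exponent])  # KeyError if not a valid encoding
```

For example, `godel_number(["0", "=", "0"])` is $2^1 \cdot 3^9 \cdot 5^1 = 196830$, and `decode(196830)` gives back `["0", "=", "0"]`. Gödel encodes a proof, a sequence of formulas, one level up in the same way, with primes raised to the formulas' numbers. The sketch stops short of that because those numbers grow very quickly.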

Gödel completed Leibniz’s goal of showing that logic can be turned into arithmetic, and deduction into calculation. Note, however, that Gödel’s proof relies specifically on the predicate calculus.

With the incompleteness theorems the chances of a positive answer to the Entscheidungsproblem all but vanished. The theorems showed that there are formal propositions that can neither be proven nor refuted, but did not completely rule out the possibility of an algorithm deciding the status of a given proposition (provable, disprovable or neither). Still, if it were guaranteed that some certificate (proof or disproof) exists for every logical sentence, it would make sense to ask whether it could be found mechanically; if one may not even exist, the chances of mechanically determining the formula’s status seem slim. Gandy writes that,

David Hilbert’s tombstone, with the inscription: “We must know, we shall know” (source: Wikipedia, by Kassandro, CC BY-SA 3.0)

(The Confluence of Ideas in 1936, pp. 63-64) Gödel’s result meant that it was almost inconceivable that the Entscheidungsproblem should be decidable: a solution could, so to speak, only work by magic.

A natural question to ask is why neither Gödel nor von Neumann proved the undecidability of the Entscheidungsproblem. Von Neumann was certainly excited by Gödel’s lecture at Königsberg and was the first person fully to understand Gödel’s results… But by then he was mainly interested in other branches of mathematics — after 1931 he did not, for many years, publish any papers about logic. More importantly, I think, his mind moved too fast for him to be able to tackle what is, in effect, a philosophical problem: one needed to reflect long and hard on the idea of calculability.

… Gödel was primarily concerned — in opposition to the climate of the time — with the analysis of nonfinitist concepts and methods… But a concern with nonfinitary reasoning is not what is needed for an analysis of calculations. Gödel admired and accepted Turing’s analysis, but it is not surprising that he did not anticipate it. Indeed, to the end of his life he believed that we might be able to use nonfinitary reasoning in (nonmechanical) calculations.
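The earlier argument, that a guaranteed certificate could be found mechanically, can be sketched in code. Suppose every sentence had a proof or a disproof and proofs could be checked mechanically. Then a brute-force search over candidate certificates would decide every sentence. The sketch below assumes a hypothetical toy "theory": a sentence is a number $m$ claiming "$m$ is a perfect square", and certificates are natural numbers. It illustrates the shape of the argument, not any real proof system.

```python
from itertools import count


def decide(sentence, is_proof, is_disproof):
    """Try certificates 0, 1, 2, ... until one proves or refutes `sentence`.

    This halts on every input only if every sentence is guaranteed to have
    a proof or a disproof; otherwise it may search forever.
    """
    for candidate in count():
        if is_proof(candidate, sentence):
            return True
        if is_disproof(candidate, sentence):
            return False


# Hypothetical toy theory: the sentence m claims "m is a perfect square".
def square_proof(n, m):
    """n proves the claim if n is a square root of m."""
    return n * n == m


def square_disproof(n, m):
    """n refutes the claim if m lies strictly between n**2 and (n + 1)**2."""
    return n * n < m < (n + 1) * (n + 1)
```

Here `decide(16, square_proof, square_disproof)` returns `True` and `decide(15, square_proof, square_disproof)` returns `False`. For first-order logic the same loop still halts on every provable sentence and on every refutable one. For theories rich enough to express arithmetic, however, Gödel's theorems rule out a guarantee that one of the two cases always occurs, so such a search is not a decision procedure.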

As Gandy explains, providing a negative answer — now the expected result — to the Entscheidungsproblem required defining precisely what was meant by the vague and intuitive notion of a calculation.

The road to tackling that problem began with attempts to simplify logic. Inspired by the success of reducing the propositional calculus to just a single connective (NAND), Moses Schönfinkel (1889–1942) attempted to simplify the Frege/Russell/Hilbert-style predicate calculus.
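Sheffer's reduction is easy to check mechanically. The following Python sketch defines negation, conjunction, disjunction, implication and equivalence from NAND alone. It then compares each against Python's built-in operators on all four combinations of inputs. The function names are illustrative.

```python
from itertools import product


def nand(a, b):
    return not (a and b)


# The familiar connectives, each built from NAND alone.
def not_(a):
    return nand(a, a)


def and_(a, b):
    return nand(nand(a, b), nand(a, b))      # NOT (a NAND b)


def or_(a, b):
    return nand(nand(a, a), nand(b, b))      # (NOT a) NAND (NOT b)


def implies(a, b):
    return nand(a, nand(b, b))               # a NAND (NOT b)


def iff(a, b):
    return and_(implies(a, b), implies(b, a))


# Check every definition against Python's operators on all inputs.
for a, b in product([False, True], repeat=2):
    assert not_(a) == (not a)
    assert and_(a, b) == (a and b)
    assert or_(a, b) == (a or b)
    assert implies(a, b) == ((not a) or b)
    assert iff(a, b) == (a == b)
```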

(On the Building Blocks of Mathematical Logic, 1924, in From Frege to Gödel, pp. 356-360) It is in the spirit of the axiomatic method as it has now received recognition, chiefly through the work of Hilbert, that we not only strive to keep the axioms as few and their content as limited as possible but also attempt to make the number of fundamental undefined notions as small as we can; we do this by seeking out those notions from which we shall best be able to construct all other notions of the branch of science in question. Understandably, in approaching this task we shall have to be appropriately modest in our demands concerning the simplicity of the initial notions.

… That the reduction to a single fundamental connective is nevertheless entirely possible provided we remove the restriction that the connective be taken only from the sequence above [of negation, conjunction, disjunction, implication and equivalence] was discovered not long ago by Sheffer (1913).

… We are led to the idea, which at first glance certainly appears extremely bold, of attempting to eliminate by suitable reduction the remaining fundamental notions, those of proposition, propositional function, and variable, from those contexts in which we are dealing with completely arbitrary, logically general propositions … To examine this possibility more closely and to pursue it would be valuable not only from the methodological point of view that enjoins us to strive for the greatest possible conceptual uniformity but also from a certain philosophic, or, if you wish, aesthetic point of view.

… It seems to me remarkable in the extreme that the goal we have just set can be realized also; as it happens, it can be done by a reduction to three fundamental signs.

… It will … be necessary to leave our problem at the point reached above and to develop first a kind of function calculus [Funktionenkalkul]; we are using this term here in a sense more general than is otherwise customary.

As is well known, by function we mean in the simplest case a correspondence between the elements of some domain of quantities, the argument domain, and those of a domain of function values … such that to each argument value there corresponds at most one function value. We now extend this notion, permitting functions themselves to appear as argument values and also as function values. We denote the value of a function $f$ for the argument value $x$ by simple juxtaposition of the signs for the function and the argument, that is, by

$$fx.$$

Functions of several arguments can, on the basis of our extended definition of function, be reduced to those of a single argument in the following way.

We shall regard

$$F(x, y),$$

for example, as a function of the single argument $y$, say, but not as a fixed given function; instead, we now consider it to be a variable function that depends on $x$ for its form. (Of course we are here concerned with a dependence of the function, that is, of the correspondence itself; we are not referring to the obvious dependence of the function value upon the argument.)

… We therefore write

$$F(x, y) = (fx)y$$

in our symbolism, or, by agreeing … that parentheses around the left end of such a symbolic form may be omitted, more simply,

$$F(x, y) = fxy;$$

here the new function, $f$, must be clearly distinguished from the former one, $F$.
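In a language with first-class functions, Schönfinkel's reduction of a two-argument $F$ to a one-argument $f$ with $F(x, y) = (fx)y$ takes one line. Here is a minimal Python sketch. The example $F(x, y) = x - y$ is hypothetical, chosen because argument order matters for subtraction.

```python
def curry(F):
    """Turn a two-argument function F into f with F(x, y) == f(x)(y)."""
    return lambda x: lambda y: F(x, y)


def F(x, y):
    """Hypothetical two-argument example; subtraction, so order matters."""
    return x - y


f = curry(F)
subtract_from_10 = f(10)  # fx: a one-argument function whose form depends on x
```

Here `f(10)(3)` equals `F(10, 3)`, namely 7. The intermediate `f(10)` is itself a function, the "variable function that depends on $x$ for its form" in Schönfinkel's words.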

Schönfinkel first introduces the device of transforming a multivariate function into a function that maps a single argument to another single-argument function. The device was first discussed by Frege (The Basic Laws of Arithmetic, p. 94: §35, “Representation of second-level functions by first-level functions”), and today we call it currying. Schönfinkel then introduces his calculus, today known as the SKI combinator calculus. Its combinatory core consists of only two fundamental “functions”, S and K: K forms constant functions ($Kxy = x$), and S is a substitution operator ($Sxyz = xz(yz)$). A third sign, U, is Schönfinkel’s “incompatibility” function, which extends Sheffer’s single connective to quantified propositions. Compositions of S, K and U can express all the same sentences expressible in the predicate calculus. The calculus has no separate quantifiers and no variables at all.
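The two combinators can be sketched as curried Python functions that follow the equations $Kxy = x$ and $Sxyz = xz(yz)$. The sketch covers only the combinatory part, not Schönfinkel's logical sign U. The names `K`, `S`, `I` and `B` are today's conventional ones (Schönfinkel wrote C for the constancy function). The identity $I$ need not be primitive, since $SKKx = Kx(Kx) = x$. Composition $B$, with $Bfgx = f(gx)$, can likewise be assembled as $S(KS)K$.

```python
def K(x):
    """Kxy = x: K applied to x yields the constant function returning x."""
    return lambda y: x


def S(x):
    """Sxyz = xz(yz): apply x and y to the same z, then apply the results."""
    return lambda y: lambda z: x(z)(y(z))


I = S(K)(K)      # identity: SKKz = Kz(Kz) = z
B = S(K(S))(K)   # composition: S(KS)Kfgz = f(gz)
```

For instance, `I(42)` is 42, and `B(lambda n: n + 1)(lambda n: n * 2)(5)` computes $(5 \cdot 2) + 1 = 11$. Because Python evaluates arguments eagerly, this closure-based encoding works for simple examples like these, but it is not a general reducer for combinator terms. A faithful implementation would represent terms as trees and rewrite them by the S and K rules.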